The OpenAI Mission

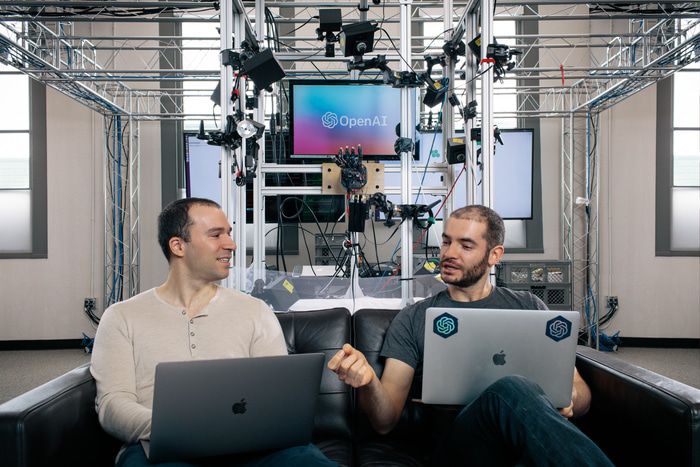

This post is co-written by Greg Brockman (left) and Ilya Sutskever (right).

This post is co-written by Greg Brockman (left) and Ilya Sutskever (right).

We’ve been working on OpenAI for the past three years. Our mission is to ensure that artificial general intelligence (AGI) — which we define as automated systems that outperform humans at most economically valuable work — benefits all of humanity. Today we announced a new legal structure for OpenAI, called OpenAI LP, to better pursue this mission — in particular to raise more capital as we attempt to build safe AGI and distribute its benefits.

In this post, we’d like to help others understand how we think about this mission.

Why now? #

The founding vision of the field of AI was “… to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it”, and to eventually build a machine that thinks — that is, an AGI. But over the past 60 years, progress stalled multiple times and people started thinking of AI as a field that wouldn’t deliver.

Since 2012, deep learning has generated sustained progress in many domains using a small simple set of tools, which have the following properties:

- Generality: deep learning tools are simple, yet they apply to many domains, such as vision, speech recognition, speech synthesis, text synthesis, image synthesis, translation, robotics, and game playing.

- Competence: today, the only way to get competitive results on most “AI-type problems” is through the use of deep learning techniques.

- Scalability: good old fashioned AI was able to produce exciting demos, but its techniques had difficulty scaling to harder problems. But in deep learning, more computational power and more data leads to better results. It has also proven easy (if costly) to rapidly increase the amount of compute productively used by deep learning experiments.

The rapid progress of useful deep learning systems with these properties makes us feel that it’s reasonable to start taking AGI seriously — though it’s hard to know how far away it is.

The impact of AGI #

Just like a computer today, an AGI will be applicable to a wide variety of tasks — and just like computers in 1900 or the Internet in 1950, it’s hard to describe (or even predict) the kind of impact AGI will have. But to get a sense, imagine a computer system which can do the following activities with minimal human input:

- Make a scientific breakthrough at the level of the best scientists

- Productize that breakthrough and build a company, with a skill comparable to the best entrepreneurs

- Rapidly grow that company and manage it at large scale

The upside of such a computer system is enormous — for an illustrative example, an AGI following the pattern above could produce amazing healthcare applications deployed at scale. Imagine a network of AGI-powered computerized doctors that accumulates a superhuman amount of clinical experience, allowing it to produce excellent diagnoses, deeply understand the nuanced effect of various treatments in lots of conditions, and greatly reduce the human error factor of healthcare — all for very low cost and accessible to everyone.

Risks #

We already live in a world with entities that surpass individual human abilities, which we call companies. If working on the right goals in the right way, companies can produce huge amounts of value and improve lives. But if not properly checked, they can also cause damage, like logging companies that cut down rain forests, cigarette companies that get children smoking, or scams like Ponzi schemes.

We think of AGI as being like a hyper-effective company, with commensurate benefits and risks. We are concerned about AGI pursuing goals misspecified by its operator, malicious humans subverting a deployed AGI, or an out-of-control economy that grows without resulting in improvements to human lives. And because it’s hard to change powerful systems — just think about how hard it’s been to add security to the Internet — once they’ve been deployed, we think it’s important to address AGI’s safety and policy risks before it is created.

OpenAI’s mission is to figure out how to get the benefits of AGI and mitigate the risks — and make sure those benefits accrue to all of humanity.

The future is uncertain, and there are many ways in which our predictions could be incorrect. But if they turn out to be right, this mission will be critical. If you’d like to work on this mission, we’re hiring!

About us #

Ilya:

I’ve been working on deep learning for 16 years. It was fun to witness deep learning transform from being a marginalized subfield of AI into one the most important family of scientific advances in recent history. As deep learning was getting more powerful, I realized that AGI might become a reality on a timescale relevant to my lifetime. And given AGI’s massive upside and significant risks, I want to maximize the positive parts of this impact and minimize the negative.

Greg:

Technology causes change, both positive and negative. AGI is the most extreme kind of technology that humans will ever create, with extreme upside and downside. I work on OpenAI because making AGI go well is the most important problem I can imagine contributing towards. Today I try to spend most of my time on technical work, and also work to spark better public discourse about AGI and related topics.